With strong leadership contributions from the OLCF, this year’s Smoky Mountain Conference set record attendance numbers, signifying increasing global solidarity in HPC strategies

2014 Smoky Mountain Conference featured OLCF leadership presentations

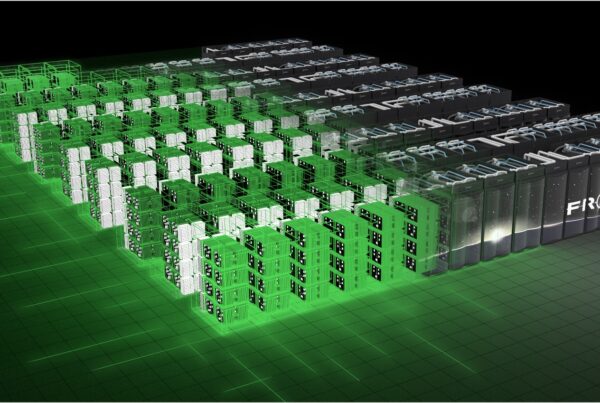

Creating the next generation of supercomputers doesn’t happen overnight. In fact, it takes years of strategic planning by many high-performance computing (HPC) experts—from software and hardware developers to users and investors.

That’s why representatives from the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) and its Oak Ridge Leadership Computing Facility (OLCF) joined HPC experts from around the world in the green hills of Gatlinburg, Tennessee, from September 2 to 6 to define scientific requirements and discuss future approaches in preparation for the arrival of the exascale era.

This year’s Smoky Mountain Conference, themed “Integration of Computing and Data into Instruments of Science and Engineering,” covered a wide range of science topics intended to advance understanding of the state of the art in HPC, simulation science, and data analytics.

“We’re trying to hear from a broad set of thought leaders in our community on an array of science topics that can drive our capabilities out into the future,” said Jack Wells, event co-organizer and director of science at the OLCF, a DOE Office of Science User Facility. “This conference helped us understand more clearly the opportunities and challenges of the future, as well as build stronger partnerships and new capabilities.”

Scientifically Speaking

The first session focused on forward-looking presentations from diverse community experts regarding strategic scientific opportunities that can drive innovation in simulation science, data analytics, and HPC.

Sadasivan Shankar, Intel’s senior principal engineer and program leader for materials design, emphasized the importance of having strong relationships with vendors as well as industrial partnerships to keep scientific computing moving forward.

Joe Carlson, an OLCF user from Los Alamos National Laboratory and a lead investigator for DOE’s Scientific Discovery through Advanced Computing (SciDAC) program, spoke about his project, the Nuclear Computational Low-Energy Initiative, or NUCLEI. The project aims to advance studies of neutron-rich nuclei, the fission of heavy nuclei, and key nuclear physics issues in neutron stars and in tests of fundamental symmetries.

John Mandrekas, the DOE program manager for the Theoretical Plasma Physics Division within the Office of Fusion Energy Sciences, discussed “High-Performance Computing in Fusion Energy Sciences.” Mandrekas’ program funds the research of several OLCF users, including C. S. Chang from the Princeton Plasma Physics Laboratory and Brian Wirth from the University of Tennessee and ORNL.

“HPC is an essential tool for advancing the mission of the Fusion Energy Sciences program and for meeting its goal of developing the predictive capability needed for a sustainable fusion energy source,” Mandrekas said.

The next session, organized by ORNL’s Scientific Computing Group Leader, Tjerk Straatsma, addressed current technologies and the near-future supercomputers being deployed to solve integrated compute and data scientific challenges. Joining him were Sadaf Alam, a former ORNL scientist and current head of HPC Operations at the Swiss National Supercomputing Centre (CSCS), and Katie Antypas, services department head at the National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory.

Antypas discussed NERSC’s newly announced supercomputer, Cori, a Cray XC system powered by Intel’s next-generation Xeon Phi processor. The machine is projected to be 10 times more powerful than Hopper, NERSC’s current Cray XE6 supercomputer, and is scheduled for completion by mid-2016.

The third session featured leaders from computer vendor partners such as Cray, NVIDIA, IBM, and Intel, who discussed the technologies and architectures expected in future machines and offered a view of both the challenges and the opportunities facing future users and operators of these systems.

“Mathematics and Computer Science Challenges for Big Data, Analytics, and Scientific Challenges” was the final session. Lori Diachin, an applied mathematician from the Center for Applied Scientific Computing at Lawrence Livermore National Laboratory, talked about FASTMath, the SciDAC institute for which she is the principal investigator. FASTMath provides scalable mathematical algorithms and software for scientific HPC applications. Many OLCF users rely on FASTMath’s math libraries and tools to increase their productivity on Titan and other OLCF computing resources.

Talk is Cheap

The conference agenda also featured a poster and networking session, where OLCF’s Supada Laosooksathit and Valentine Anantharaj presented their posters, “Enabling High-Throughput Simulation and Data Analysis for the ALICE Experiment on Titan” and “End-to-End Computing Using Functional Partitioning: A Community Earth System Model Case Study,” respectively.

Other noteworthy participants included JJ Chai and Sophie Blondel from the Computer Science and Mathematics Division, who presented “Functional Phylogenomics Analysis of Bacteria and Archaea Using Consistent Genome Annotation with UniFam” and “Xolotl: A Plasma Surface Interaction Simulator,” respectively. Jaron Krogel from the Materials Science and Technology Division presented a poster on “Ab-initio Many-Body Calculations for Outstanding Problems in Materials.”

The conference reported a record attendance of 150, due largely to a partnership with Japan’s RIKEN Institute of Physical and Chemical Research. RIKEN staff at several facilities across Japan devoted to research in engineering and computational science joined the conference via satellite to participate in a workshop on the co-design of exascale applications, in which software and hardware designers came together to discuss common needs and capabilities.

“Research scientists and engineers need to have an effective conversation between themselves and the designers of the computer hardware and software they will use to figure out the most effective ways to jointly ‘codesign’ future supercomputers and scientific applications,” Wells said.

Through events such as the Smoky Mountain Conference, communication and collaboration turn today’s ideas into tomorrow’s reality. As the HPC community continues to advance, the world moves that much closer to the exascale era, an age that will push our understanding of science far beyond what we previously thought possible. —Jeremy Rumsey

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.